Minimal definition: computers somewhere else which are always on and which can be accessed over the internet.

What it is (physically)

Computers in data centres

- How many are there?

- Approx. 12k datacenters worldwide

- Datacenters can include a lot of servers e.g. CERN’s data centre contains around 10k servers

- Where are they?

- The US has the most datacenters with around 2,500 facilities

- In Europe, Germany, the UK and France have 300-600 each

- In general, good infrastructure/connectivity and cheaper power support data centre development

- These require cooling and are power-hungry

- Some regulatory arbitrage e.g. George Hotz (‘we have a lot of computers and very high power draw but we are certainly not a data centre’)

Precursors: what makes it possible

- Internet connectivity

- Generally better commercial and residential bandwidth (FTTC/FTTP etc.)

- Phones/4G

- IoT

- Data centres

- Virtualisation: instead of a computer (server) running one operating system, it runs a hypervisor which allows many operating systems to run at once. Different users can then operate and manage these operating systems as though they each had their own computer.

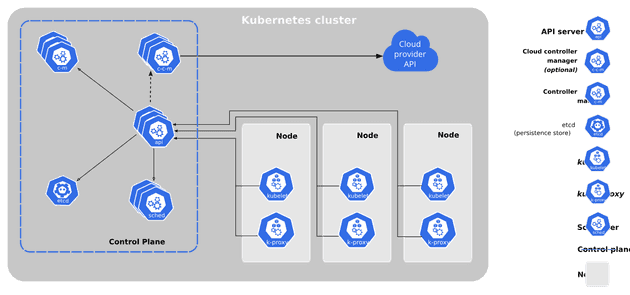

- Containerisation: a type of virtualisation in which all the components of an application are bundled into a single container image and run in isolated user space on a shared operating system. Containers are lightweight, portable, and highly conducive to automation. The key technologies here are Docker and Kubernetes.

- Reliability — once you have virtualisation/containerisation and you spread the workload across many servers, if one of them falls over, another can take over, leading to ‘fault tolerance’

- e.g. Amazon realising it can rent its APIs/services

- Jeff Bezos’ API mandate memo

- This is still happening: see e.g. the FT article ‘How Lidl accidentally took on the big guns of cloud computing’

- Extremely high-speed networking within a given data centre and also between data centres from a given cloud provider within a given region (e.g. AWS within Western Europe)
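
The reliability point above (one server falls over, another takes over) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Server` class, the server names and the `dispatch` function are all hypothetical and are not part of any cloud provider's API. Real load balancers add health checks, retries and much more.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """A hypothetical server that is either healthy or down."""
    name: str
    healthy: bool = True

    def handle(self, request: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} handled {request}"


def dispatch(request: str, servers: list[Server]) -> str:
    """Try each server in turn and return the first successful response."""
    for server in servers:
        try:
            return server.handle(request)
        except ConnectionError:
            continue  # this server fell over, so try the next one
    raise RuntimeError("no healthy servers available")


# server-a is down, so the request should be picked up by server-b.
pool = [Server("server-a", healthy=False), Server("server-b"), Server("server-c")]
result = dispatch("GET /report", pool)
print(result)
```

The caller never needs to know which machine did the work, which is what makes it safe to lose any single one of them.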

Kubernetes

What it is (more abstractly)

-

Largest providers

- Amazon Web Services (AWS)

- Microsoft Azure

- Google Cloud Platform (GCP)

- Oracle Cloud Infrastructure (OCI)

- Alibaba Cloud (China)

Core/typical cloud offerings (below I use AWS examples)

- Storage: e.g. Simple Storage Service (S3) ‘storage buckets’, Elastic Block Store (EBS) volumes that attach to EC2 instances

- Compute: virtual machines e.g. Elastic Compute Cloud (EC2)

- Databases: e.g. Redshift (a columnar data warehouse based on PostgreSQL), Relational Database Service (RDS; PostgreSQL, MySQL, Oracle etc. flavours)

- Various other bits

- Message queues e.g. Simple Queue Service (SQS)

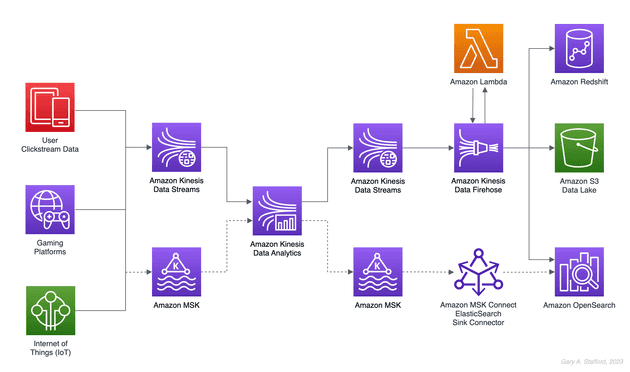

- Streaming e.g. Kinesis Data Streams, or managed Kafka via Amazon MSK

- Serverless e.g. Lambda

- Container registry e.g. Elastic Container Registry (ECR)

- Container running i.e. managed Kubernetes e.g. EKS (Elastic Kubernetes Service)

- Specific to data: ML/AI e.g. SageMaker; ETL e.g. AWS Glue
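
To make the message queue item above a bit more concrete, here is a minimal sketch of the idea: a producer drops messages onto a queue and a consumer picks them up independently. It uses Python's standard-library `queue.Queue` as an in-process stand-in. This is an assumption for illustration only and is not the SQS API; a managed queue would be reached over the network. The message names are made up.

```python
import queue
import threading

# In-process stand-in for a managed message queue (assumption for illustration).
orders = queue.Queue()
processed = []


def producer() -> None:
    """Put three hypothetical messages on the queue, then a stop signal."""
    for i in range(3):
        orders.put(f"order-{i}")
    orders.put(None)  # sentinel telling the consumer there is nothing more


def consumer() -> None:
    """Take messages off the queue one at a time until the sentinel arrives."""
    while True:
        message = orders.get()
        if message is None:
            break
        processed.append(message.upper())


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(processed)
```

The producer and consumer only share the queue, so either side can be slowed down, restarted or scaled without the other needing to change.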

Pros and cons

Pros

- Potentially lower costs as costs are in operating expenditure and you ‘pay as you go’

- Certain services scale automatically with the workload, such that computational/storage resources are increased or decreased as required automatically

- High availability i.e. very low to practically no downtime

- Workers can access cloud resources wherever they are in the world, subject to good internet connection and authentication / security privileges

- Server/low level software maintenance — cloud providers usually handle routine maintenance, updates, and security patches, reducing the need for IT staff
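
The automatic scaling point above can be sketched as a simple proportional rule: if each replica is busier than the target, add replicas; if it is quieter, remove some. The function name, the load numbers and the minimum/maximum limits below are assumptions for illustration. Real autoscalers (on any provider) add cooldown periods, smoothing and other safeguards that are not shown here.

```python
import math


def desired_replicas(current: int, observed_load: float, target_load: float,
                     minimum: int = 1, maximum: int = 10) -> int:
    """Scale the replica count by the ratio of observed to target load per replica."""
    if current < 1 or target_load <= 0:
        raise ValueError("current must be >= 1 and target_load must be > 0")
    raw = math.ceil(current * observed_load / target_load)
    # Clamp to the configured limits so a spike cannot scale without bound.
    return max(minimum, min(maximum, raw))


# Hypothetical numbers: 4 replicas, target of 60% CPU per replica.
busy = desired_replicas(4, observed_load=90, target_load=60)
quiet = desired_replicas(4, observed_load=15, target_load=60)
spike = desired_replicas(4, observed_load=300, target_load=60)
print(busy, quiet, spike)
```

The `maximum` cap matters for cost as well as stability, since every extra replica is billed.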

Cons

- Data security: cannot reduce the risk of a breach on someone else’s infrastructure to 0

- Use of multiple geographical locations, which the cloud makes quite straightforward, can make it difficult to comply with relevant laws and regulations. For example, Germany is often a default data centre choice for those in Europe because, as I understand it, Germany has the most stringent laws in this area

- Vendor dependency: big companies may have a multi-cloud strategy but this is likely to reduce benefits around cost and efficiency (e.g. consider egress fees between different cloud providers)

- Cost management: a spike in activity could cause a material increase in resources being used, which you will then pay for; in addition because the cost calculations are complex it can be very hard to estimate costs ahead of time

- Latency / speed: particularly when connecting to data centres e.g. on a different continent. Further, the exact location, hardware, and usage patterns of other users will impact on the performance of e.g. your virtual machine / EC2 instance
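
The cost management point above is easy to see with some back-of-the-envelope arithmetic. All of the numbers below are hypothetical (the hourly rate, the instance counts and the length of the spike are made up and are not real AWS prices); the point is only how quickly a short spike shows up on a pay-as-you-go bill.

```python
# All figures are assumptions for illustration, not real provider prices.
HOURLY_RATE_PER_INSTANCE = 0.10  # assumed USD per instance-hour
HOURS_PER_MONTH = 730            # common approximation for one month


def monthly_compute_cost(instance_hours: float,
                         rate: float = HOURLY_RATE_PER_INSTANCE) -> float:
    """Pay-as-you-go cost: instance-hours used multiplied by the hourly rate."""
    return round(instance_hours * rate, 2)


baseline_hours = 2 * HOURS_PER_MONTH  # two instances running all month
spike_hours = 18 * 48                 # 18 extra instances for a 48-hour spike

baseline = monthly_compute_cost(baseline_hours)
with_spike = monthly_compute_cost(baseline_hours + spike_hours)
print(baseline, with_spike)
```

In this made-up scenario a two-day spike adds roughly 60% to the monthly compute bill, and that is before storage, networking or egress charges, which are priced separately.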

Relating to our other practices

Cloud platforms have revolutionised the way data is stored, processed, and analysed. They offer scalable, flexible, and cost-effective solutions for handling large volumes of data.

Cloud Engineers play a crucial role in leveraging cloud platforms to build and manage data infrastructure. Examples of what they are responsible for:

- Data Warehousing and Data Lakes: Provisioning data warehouses and data lakes to store and organise data.

- ML Infrastructure: Setting up cloud-based infrastructure for machine learning model development and deployment.

- Model Management: Managing machine learning models, including version control, deployment, and monitoring.

- Cost Optimisation: Implementing strategies to reduce cloud infrastructure costs while maintaining performance.

- High Availability: Ensuring data redundancy and fault tolerance to minimise downtime.

- Monitoring & Alerting: Tracking the health and performance of cloud resources, data pipelines and applications.

- Access Controls: Managing access controls to ensure data security and compliance.

Mindset of a Cloud Engineer

Cloud Engineers possess a mindset that is characterised by a strong focus on efficiency, reliability, and cost-optimisation. Here are some key aspects of their mindset:

Automation-First Approach:

- Efficiency: Cloud Engineers strive to automate as many tasks as possible to reduce manual effort and errors.

- Consistency: Automation ensures that infrastructure and processes are deployed and managed consistently.

- Scalability: Automated provisioning makes it easier to scale resources up or down based on demand.

Security and Compliance:

- Risk Mitigation: Cloud Engineers prioritise security measures to protect sensitive data and prevent unauthorised access.

- Compliance Adherence: They ensure that cloud infrastructure and processes comply with relevant regulations and standards (e.g., GDPR, HIPAA).

- Continuous Improvement: Security is an ongoing process, and Cloud Engineers continually evaluate and update security measures.

Cost Optimisation:

- Resource Efficiency: Cloud Engineers seek to optimise resource utilisation to minimise costs.

- Cost-Effective Solutions: They explore and implement cost-saving strategies, such as reserved instances, spot instances, and serverless computing.

- Cost Monitoring: Regular monitoring and analysis of cloud spending helps identify areas for optimisation.

Reliability and High Availability:

- Redundancy: Cloud Engineers design infrastructure with redundancy to ensure high availability and minimise downtime.

- Disaster Recovery: They implement disaster recovery plans to protect against data loss and service disruptions.

- Monitoring and Alerting: Robust monitoring and alerting systems help identify and address issues promptly.

Continuous Improvement:

- Learning and Adapting: Cloud Engineers stay up-to-date with the latest cloud technologies and best practices.

- Experimentation: They are open to experimenting with new tools and techniques to improve efficiency and performance.

- Feedback Loops: Cloud Engineers actively seek feedback from stakeholders to identify areas for improvement.

Other things to know about

Often the typical cloud offering is some combination of some open source software running on the required building blocks e.g.

- Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is literally Airflow running on AWS

- At some point ‘the cloud’ just becomes more and more abstracted services

- pay money (increased with use usually), get API to use

- e.g. Supabase: a database platform with extra bits so app builders do not have to worry about databases, where to store user info etc.

Typical things to know about if you are on the cloud

- It can get complex pretty quickly

For example, Kinesis Data Streams and Amazon MSK can often be used interchangeably in streaming data workflows.

Understanding common cloud migration strategies can help you effectively engage with potential clients and address their specific needs. Here are some key strategies to be familiar with:

- Lift and Shift involves moving existing applications and data to the cloud without making significant changes to the architecture.

- Benefits: Quick and cost-effective for applications that are well-suited to cloud environments.

- Considerations: May not fully leverage cloud benefits like scalability and elasticity.

- Re-platforming is similar to lift and shift, but involves making minor modifications to the application to optimise it for the cloud environment.

- Benefits: Can improve performance and reduce costs compared to lift and shift.

- Considerations: Requires some technical expertise and may not fully realise cloud benefits.

- Refactoring involves re-architecting applications to take full advantage of cloud-native capabilities like microservices, serverless computing, and containers.

- Benefits: Can significantly improve performance, scalability, and cost-efficiency.

- Considerations: Requires significant technical expertise and may involve a lengthy migration process.

DevOps is a cultural and technical approach that aims to break down silos between development and operations teams. It emphasises collaboration, automation, and continuous delivery to deliver software faster and more reliably.

Infrastructure as Code (IaC) is a methodology that treats infrastructure resources (like servers, networks, and storage) as code. This means you can manage and provision infrastructure using the same version control systems, automation tools, and testing practices used for application code.

- Common examples of this include AWS CloudFormation, Terraform and Azure Resource Manager (ARM) templates
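
The core idea behind Infrastructure as Code can be sketched without any real tool: you declare the state you want, compare it with what currently exists, and work out the steps to close the gap. The sketch below is a toy under stated assumptions. The `plan` function, the resource names and the dictionary format are all hypothetical and do not reflect how CloudFormation, Terraform or ARM templates are actually written or implemented.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> list[tuple[str, str]]:
    """Return the (action, resource_name) steps needed to make actual match desired."""
    steps = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Desired state lives in version control alongside application code (hypothetical resources).
desired_state = {
    "web-server": {"type": "vm", "size": "small"},
    "data-bucket": {"type": "bucket", "versioning": True},
}
# What is actually running right now (also hypothetical).
actual_state = {
    "web-server": {"type": "vm", "size": "large"},
    "old-queue": {"type": "queue"},
}

steps = plan(desired_state, actual_state)
for action, name in steps:
    print(action, name)
```

Because the desired state is just a file, it can be reviewed, versioned and tested like any other code, which is the practical benefit the paragraph above describes.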

Microservices architecture breaks down large applications into smaller, independent services that communicate with each other via APIs.

Hybrid Cloud: Combining on-premises and cloud infrastructure to meet specific business needs.

Key things to know about when using the cloud / three very basic things to take away:

- Computation, storage, etc can be separated out as long as computers can communicate with each other e.g. via APIs

- The cloud shifts expenditure

- From: capital expenditure (buying on-prem servers) and having specific expertise within the company (e.g. database administrators)

- To: operating expenditure (paying $$$ to AWS/Azure/GCP every month) and having specific expertise within the company (people with cloud understanding)

- The server maintenance piece being outsourced is very helpful, because clearly this is an area of competitive advantage for the cloud provider and they are very, very good at maintaining vast numbers of servers in the data centre given that it is core to their business

- Although in theory the cloud makes things easier, a few things can easily go awry without the right expertise and decision making:

- Security

- Architecture/complexity

- People forgetting to turn off resources after they spin them up
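
The last point (people forgetting to turn off resources) is the kind of check that is easy to automate. The sketch below works on a made-up inventory: the `Resource` class, the resource names, the dates and the seven-day threshold are all assumptions. In practice the inventory would come from the cloud provider's own APIs or billing reports, which are not shown here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Resource:
    """A hypothetical inventory entry for something someone spun up."""
    name: str
    running: bool
    last_used: datetime


def find_forgotten(resources: list[Resource], now: datetime,
                   max_idle: timedelta = timedelta(days=7)) -> list[str]:
    """Return names of resources that are still running but idle for longer than max_idle."""
    return [r.name for r in resources if r.running and now - r.last_used > max_idle]


now = datetime(2024, 6, 30)
inventory = [
    Resource("training-vm", running=True, last_used=datetime(2024, 6, 1)),
    Resource("api-server", running=True, last_used=datetime(2024, 6, 29)),
    Resource("old-notebook", running=False, last_used=datetime(2024, 5, 1)),
]

forgotten = find_forgotten(inventory, now)
print(forgotten)
```

A report like this, run on a schedule, is a cheap guard against paying for things nobody is using.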

Further reading

- For a bullish view see Mark P. Mills’ “The Cloud Revolution”. His core thesis is that there is significant synergy between advancements in computing power, analytics and connectivity and that we will continue to see innovation and convergence between information technology (cloud), biotech (CRISPR, personalised medicine) and new energy technologies.

- A bit more on the Jeff Bezos API mandate in this gist